We need to take the following steps to do this:

1) Create a Win32 Console Application project.

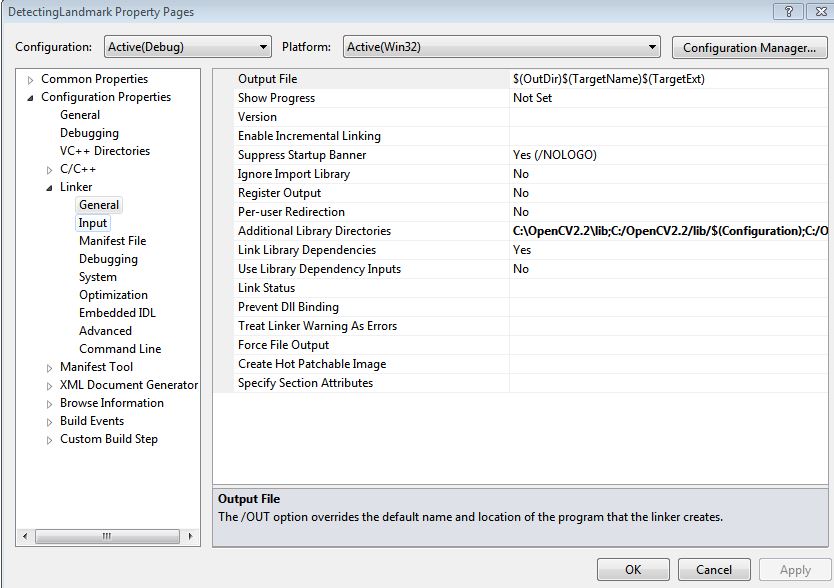

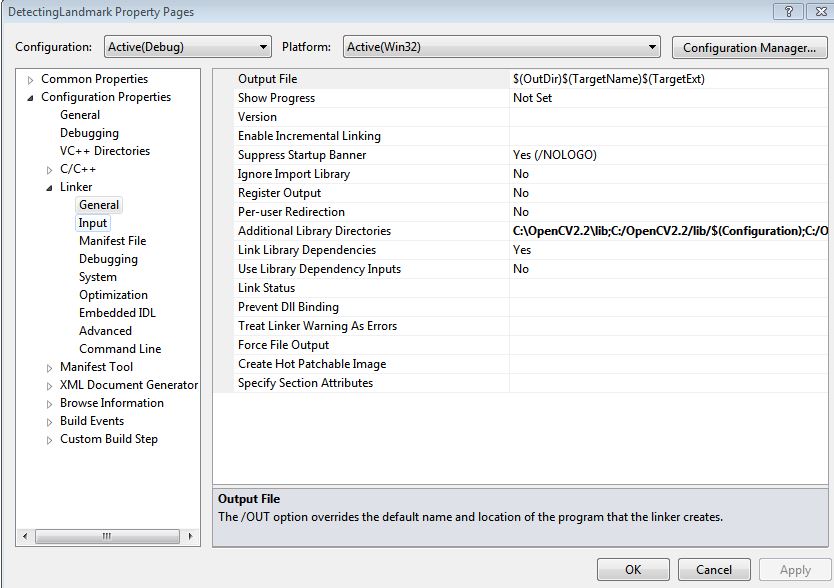

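2) We need to add the following dependencies under Project Properties -> Configuration Properties -> Linker -> Input -> Additional Dependencies. The trailing "d" in each file name marks the debug build of the library, so these entries belong in the Debug configuration.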

opencv_core220d.lib;opencv_highgui220d.lib;opencv_imgproc220d.lib;opencv_legacy220d.lib;opencv_ml220d.lib;opencv_video220d.lib;

3) We need to add the following directories under Project Properties -> Configuration Properties -> C/C++ -> General -> Additional Include Directories

C:\OpenCV2.2\include;C:\OpenCV2.2\include\opencv;

4) We need to add the "C:\OpenCV2.2\lib" directory under Project Properties -> Configuration Properties -> Linker -> General -> Additional Library Directories (this setting is on the Linker "General" page, not the "Input" page)

C:\OpenCV2.2\lib
